I had been wondering whether it would be possible to run a local language model on the uConsole. I did not expect it to work, though: I tried Llama 2 7B on an Intel N95 device last semester for a school project, and the experience was not ideal.

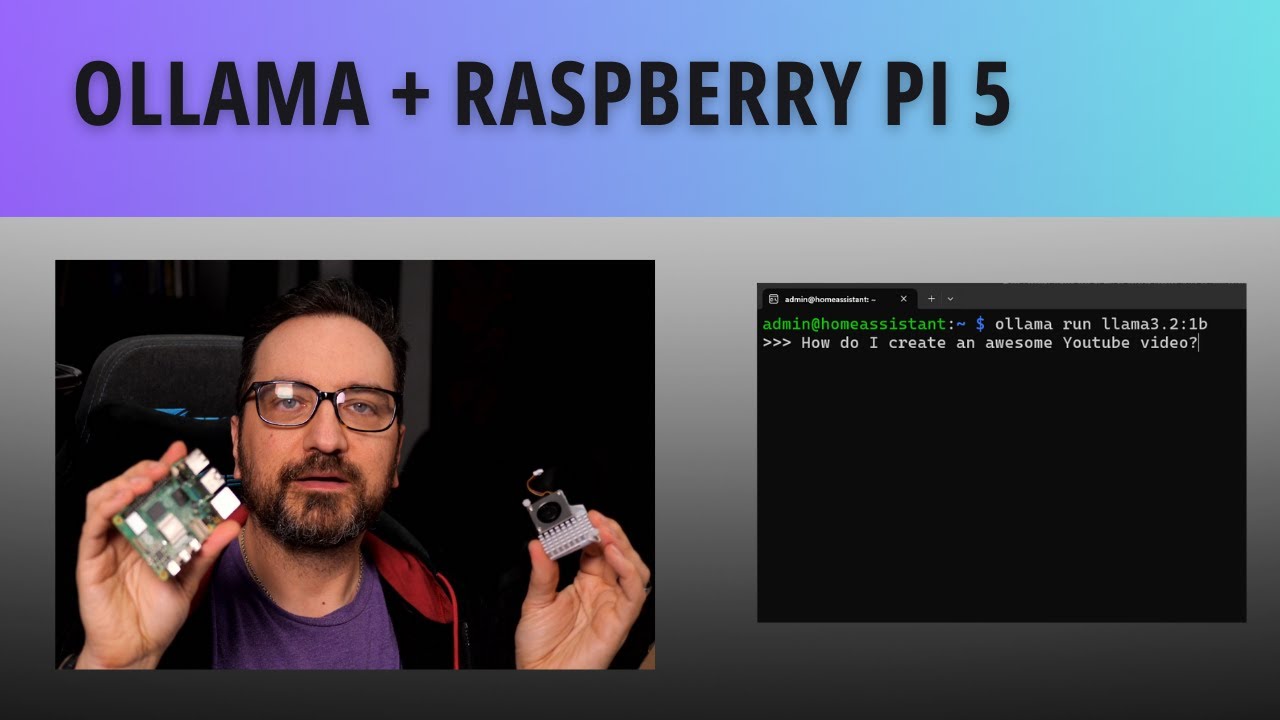

Luckily, I came across this video comparing the Pi 4 with the Pi 5 running Llama and realized that the newer Llama 3.2:1b takes significantly fewer resources to run.

So I tried it, and it works. I get about 2 to 3 tokens per second, which is usable.
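If you want to measure the speed yourself, here is a rough sketch. It assumes the model is served by Ollama on its default local port (the `llama3.2:1b` tag style suggests that, but it is an assumption), and it relies on the `eval_count` and `eval_duration` fields that Ollama's `/api/generate` endpoint returns. The function names and the prompt are made up for illustration.

```python
import json
import urllib.request


def tokens_per_second(stats: dict) -> float:
    """Generation speed from Ollama-style stats.

    eval_count is the number of generated tokens,
    eval_duration is the generation time in nanoseconds.
    """
    return stats["eval_count"] / (stats["eval_duration"] / 1e9)


def measure(model: str = "llama3.2:1b", prompt: str = "Why is the sky blue?") -> float:
    """Send one non-streaming request to a local Ollama server (assumed setup)."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    req = urllib.request.Request(
        "http://localhost:11434/api/generate",  # Ollama's default address
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return tokens_per_second(json.loads(resp.read()))


# Example (needs a running server with the model pulled):
# print(f"{measure():.2f} tokens/s")
```

If you are using the Ollama CLI instead, `ollama run llama3.2:1b --verbose` should print a similar eval rate after each reply.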

A CM5 would be much better, though, so I am looking forward to it down the road.

I have also tried Phi3 and Llama3.2:3b. They are usable as well, but you have to wait significantly longer, since they only manage about 1 to 1.5 tokens per second.
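To put those rates in perspective, here is a quick back-of-the-envelope calculation of how long a reply takes. The 150-token reply length is a hypothetical figure picked for illustration, and the estimate ignores the time spent processing the prompt before generation starts.

```python
def wait_seconds(n_tokens: int, tokens_per_second: float) -> float:
    """Time to generate n_tokens at a steady generation rate."""
    return n_tokens / tokens_per_second


REPLY_TOKENS = 150  # hypothetical reply length, not a measured value

# (slow, fast) rates in tokens per second, taken from the numbers in this post
rates = {
    "llama3.2:1b": (2.0, 3.0),
    "phi3 / llama3.2:3b": (1.0, 1.5),
}

for name, (slow, fast) in rates.items():
    best = wait_seconds(REPLY_TOKENS, fast)
    worst = wait_seconds(REPLY_TOKENS, slow)
    print(f"{name}: {best:.0f} to {worst:.0f} s for {REPLY_TOKENS} tokens")
# llama3.2:1b: 50 to 75 s for 150 tokens
# phi3 / llama3.2:3b: 100 to 150 s for 150 tokens
```

So the larger models roughly double the wait for the same reply.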